OpenAI has unveiled its latest innovation, GPT-4o, a groundbreaking AI model designed to revolutionize human-machine communication. GPT-4o stands out for its seamless integration of text, audio, and visual inputs and outputs, promising a more natural and intuitive user experience.

This “omni” model (the “o” stands for “omni”) accepts a wider range of communication formats. Users can provide any combination of text, audio, and images as input, and GPT-4o can respond with any combination of text, audio, and image output. Notably, response times are significantly faster and approach the pace of human conversation: OpenAI reports that the model responds to audio input in as little as 232 milliseconds, with an average of 320 milliseconds.

A Pioneering Approach to Machine Understanding

GPT-4o represents a significant leap forward compared to its predecessors. Unlike earlier versions that relied on separate models for processing different types of input, GPT-4o utilizes a single, unified neural network. This innovative approach allows the model to retain crucial information and context that might have been lost in the fragmented processing pipeline of previous models.

The benefits are particularly evident in audio interactions. The earlier “Voice Mode” built on GPT-3.5 and GPT-4 suffered from latency, with average response times of 2.8 seconds and 5.4 seconds respectively. The cause was a pipeline of three distinct models: one converting audio to text, another producing a text response, and a third converting that text back to audio. Because the middle model only ever saw a transcript, details such as tone, background noise, and the presence of multiple speakers were lost.
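
The information loss in that three-stage pipeline can be illustrated with a toy sketch. Everything here is hypothetical: `AudioClip`, `transcribe`, `respond`, and `synthesize` are stand-ins invented for illustration, not OpenAI components, and the stages are stubs rather than real models.

```python
from dataclasses import dataclass


@dataclass
class AudioClip:
    """Hypothetical stand-in for an utterance plus its non-verbal cues."""
    words: str
    tone: str
    speakers: int
    background: str


def transcribe(clip: AudioClip) -> str:
    # Stage 1 (speech-to-text stub): only the words survive.
    return clip.words


def respond(text: str) -> str:
    # Stage 2 (text model stub): sees plain text and nothing else.
    return f"You said: {text}"


def synthesize(text: str) -> AudioClip:
    # Stage 3 (text-to-speech stub): no cues left, so it uses defaults.
    return AudioClip(words=text, tone="neutral", speakers=1, background="none")


def cascaded_voice_mode(clip: AudioClip) -> AudioClip:
    # Chain the three stages the way the older Voice Mode is described.
    return synthesize(respond(transcribe(clip)))


clip = AudioClip(words="I'm fine", tone="sarcastic", speakers=2, background="traffic")
reply = cascaded_voice_mode(clip)
print(reply.words, "| tone:", reply.tone)
```

In this sketch the sarcastic tone, the second speaker, and the traffic noise never reach the second stage, which is the kind of loss a single end-to-end model is meant to avoid.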

Enhanced Vision and Audio Capabilities for Broader Applications

By merging these functionalities into a single system, GPT-4o boasts significant improvements in understanding visual and audio information. This allows the model to tackle more complex tasks, including composing songs with musical harmony, providing real-time language translation, and even generating outputs that incorporate expressive elements such as laughter or singing. Potential applications range from interview preparation and on-the-fly language translation to generating customer service responses tailored to specific situations.

OpenAI’s GPT-4o: A Leap in Multimodal AI Interaction

Industry experts are buzzing about OpenAI’s latest offering, GPT-4o. While some await hands-on experience to assess its true impact, others highlight the groundbreaking nature of its unified architecture.

A Turning Point in Multimodal Communication

Unlike previous models with bolted-on audio or image capabilities, GPT-4o operates as a truly multimodal system. It processes and generates text, audio, and visual output within a single model. This opens up applications that were previously impractical, although it may take time to grasp the full scope of its usefulness.

Performance and Inclusivity

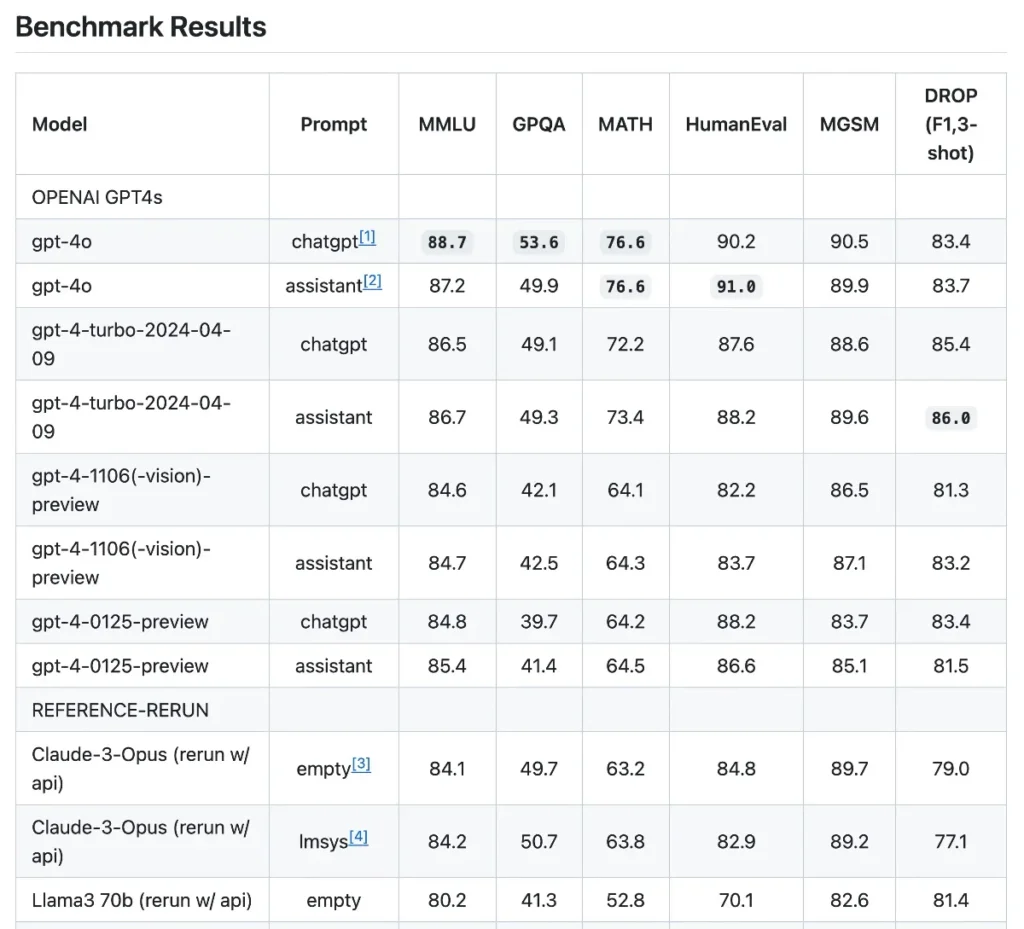

GPT-4o matches the performance of GPT-4 Turbo on English text and coding tasks, while improving significantly on text in non-English languages. This marks a major step towards inclusivity and versatility in language processing. The model also demonstrates strong reasoning capabilities: OpenAI reports a score of 88.7% on zero-shot chain-of-thought MMLU, a benchmark of general knowledge questions.

Pushing the Boundaries in Audio, Translation, and Beyond

According to OpenAI, GPT-4o outperforms Whisper-v3, its earlier speech model, in speech recognition and speech translation. Its multilingual and visual understanding capabilities also show significant advances, reinforcing OpenAI’s position at the forefront of AI research in these areas.

ChatGPT will offer GPT-4o in Free, Plus, and Team tiers for text and vision tasks.

GPT-4o is a multimodal marvel, seamlessly weaving text, audio, and visuals for powerful communication.

GPT-4o is available to anyone with an OpenAI API account, and you can use the model in the Chat Completions API, the Assistants API, and the Batch API. The model also supports function calling and JSON mode.
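
As a rough sketch of what a request might look like, the snippet below builds a Chat Completions request body that combines text and an image and asks for JSON output. It only constructs and prints the payload; nothing is sent. The helper name `build_chat_request`, the prompt, and the image URL are placeholders invented for this example, and the exact fields should be checked against OpenAI's current API reference.

```python
import json


def build_chat_request(prompt: str, image_url: str) -> dict:
    """Build a request body mixing text and image input (illustrative helper)."""
    return {
        "model": "gpt-4o",
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
        # Ask for a JSON object in the reply; the prompt should mention JSON too.
        "response_format": {"type": "json_object"},
    }


payload = build_chat_request(
    "Describe this image and reply in JSON.",
    "https://example.com/photo.png",  # placeholder URL, not a real asset
)
body = json.dumps(payload, indent=2)
print(body)
```

To actually call the model, this body would be sent as a POST request to the Chat Completions endpoint with an API key, or the same fields would be passed through an official client library.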

OpenAI’s tests show GPT-4o performing on par with or slightly better than rivals such as Claude 3 Opus, Gemini, and Llama 3 on text tasks.

GPT-4o offers a more natural experience, improved performance across languages and tasks, and wider accessibility, and its multimodal processing enables innovative applications.

OpenAI is still researching and developing safety measures for GPT-4o, as it does with all of its large language models. It’s important to use the model responsibly and to be aware of its potential biases and limitations.
